Every new idea starts with a hypothesis. You come up with an explanation of why it could work, then validate it with a lean experiment.

This sounds like a pretty straightforward, non-controversial way of building products, right?

But here’s the problem: when we focus entirely on a single hypothesis, it can blind us to other possibilities. As a result, we tend to seize on every small signal that supports it (confirmation bias).

This isn’t necessarily a concern for early-stage products. At that stage, any signal is better than nothing. However, if you are building a product at scale, it can mislead you into making irreversible decisions.

So, how do you avoid this trap?

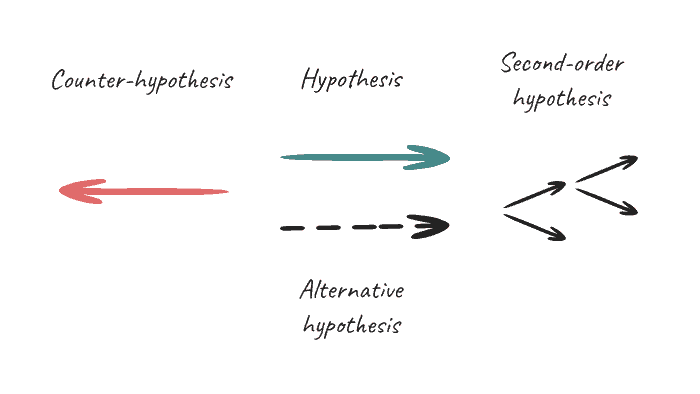

A good solution is to force yourself to come up with additional hypotheses from different angles BEFORE the validation begins:

- Counter-hypothesis

- Alternative hypothesis

- Second-order hypothesis

Together with the main hypothesis, these make up what I call the 4-hypothesis framework.

Counter-hypothesis

“Why might the idea not work, or worse, have a negative impact?”

A counter-hypothesis acts as a sanity check. Your goal is to play devil’s advocate against your own idea to see how well it holds up. If you can explain why your idea might fail, and existing evidence supports that explanation, you may want to re-evaluate the idea’s priority.

You will also get a sense of how “risky” the idea is. In other words, is there any chance that it can hurt your product?

The risk level should inform your choice of validation method:

- If the risk is high, you might want to start with a smoke test, a closed beta, or a small-scale A/B test.

- If the risk is low or non-existent, you can run a full-scale A/B test or launch the idea directly.

The purpose of a counter-hypothesis isn’t to stop you from testing new ideas. On the contrary, it helps you prioritize high-quality ideas and test them with confidence.

Even if an experiment fails, which happens 86% of the time, you will have a potential explanation to base your next idea on.

Alternative hypothesis

“Could the idea work, but not for the reason I thought?”

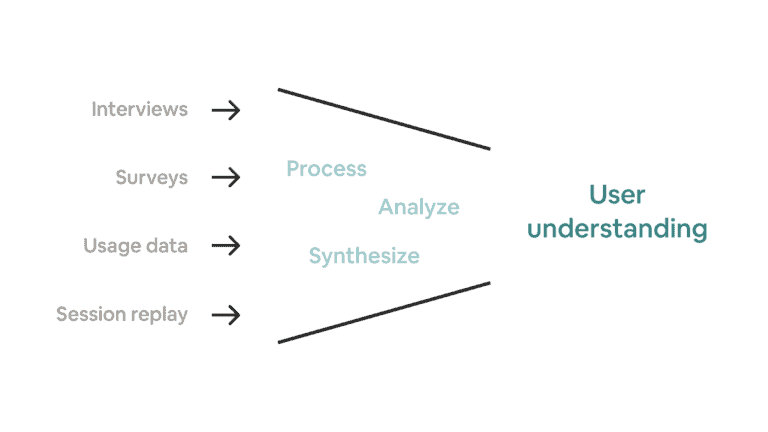

We often focus on validating whether an idea works, but not why it works. Yet the latter is just as important, if not more so.

You might be wondering, “If the idea works, why does it matter?”

Well, it doesn’t…if this is the only idea you will ever have. But I doubt that’s the case.

Successful products are built on continuous, compounding learning. Every validated hypothesis should feed into your broader product strategy. If you attribute a past experiment’s success to the wrong reason, every future decision built on that learning will suffer. In statistics, this is sometimes called a type III error (correctly rejecting the null hypothesis for the wrong reason), and it can also be seen as a form of survivorship bias.

Unfortunately, it’s not always easy to validate why an idea works. That’s why you should test the same idea from multiple angles, for example by combining behavioral (what users do) and attitudinal (what users say) research methods, before making it part of your long-term product strategy. Even then, never rule out the possibility that your earlier conclusions are simply wrong.

Second-order hypothesis

“Could the idea work, but introduce unintended effects?”

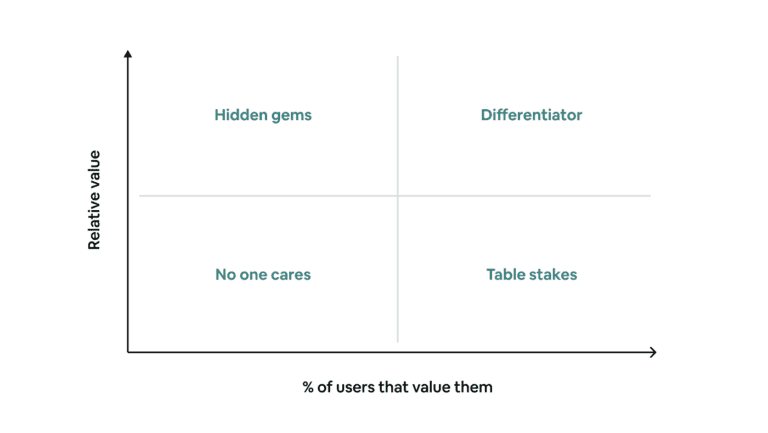

Besides the primary outcome you hope to achieve, an idea can also introduce second- or third-order effects:

- Raising prices might hurt acquisition and retention.

- Tailoring the product’s onboarding flow for one group of users might harm the experience of another.

- A new feature might expand product value but increase product complexity.

If you don’t anticipate possible second-order effects before the experiment, you won’t know what to measure. As a result, those effects will go unnoticed, and you won’t be able to make a holistic decision that considers the net impact of an idea. This often leads to a situation in which you “win the battle but lose the war.”

Example: Personal finance app

Let’s pretend you are a product manager for a personal finance app.

You have an idea to make “smart budgeting” the default option instead of manual budgeting, to increase the percentage of users who reach their monthly savings goals, a metric highly correlated with retention. You believe the best way to validate this idea is to run an A/B test among new users.

Main hypothesis

Most users don’t know how to set realistic, attainable budgets. Using machine learning to set budgets based on users’ demographic and spending data will help more of them reach their savings goals.

Counter-hypothesis

Users might not understand what smart budgeting means. If they don’t trust it, they won’t use it. –> A complementary survey to understand users’ perception of the feature will be helpful.

The underlying AI might not be good enough. –> We need to run simulations first to make sure it performs better than manual budgeting.

Alternative hypothesis

Could the experiment succeed simply because smart budgeting has a simpler setup flow? –> We need to break down the funnel to measure Setup Started > Finished % and Setup Finished > Savings Goal Reached % to isolate the cause.
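To make the funnel breakdown concrete, here is a minimal sketch, assuming you can export per-variant counts of three events. The `FunnelCounts` record, its field names, and the helper functions are hypothetical, not part of any particular analytics tool. The idea is to split the end-to-end conversion into its two steps and compute the relative lift of each step separately.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FunnelCounts:
    """Hypothetical per-variant event counts exported from the A/B test."""
    setup_started: int
    setup_finished: int
    goal_reached: int


def step_rates(c: FunnelCounts) -> dict[str, float]:
    """Split the end-to-end conversion into its individual funnel steps."""
    return {
        "started_to_finished": c.setup_finished / c.setup_started,
        "finished_to_goal": c.goal_reached / c.setup_finished,
        "started_to_goal": c.goal_reached / c.setup_started,
    }


def lift(treatment: FunnelCounts, control: FunnelCounts) -> dict[str, float]:
    """Relative lift of treatment over control for each funnel step."""
    t, c = step_rates(treatment), step_rates(control)
    return {step: t[step] / c[step] - 1 for step in t}


if __name__ == "__main__":
    # Made-up counts for illustration only, not real experiment data.
    control = FunnelCounts(setup_started=1000, setup_finished=600, goal_reached=180)
    treatment = FunnelCounts(setup_started=1000, setup_finished=800, goal_reached=240)
    for step, value in lift(treatment, control).items():
        print(f"{step}: {value:+.1%}")
```

With these illustrative numbers, the whole end-to-end lift (about +33%) comes from the setup step, while the finished-to-goal step shows no lift at all. That pattern would support the alternative hypothesis: the simpler setup flow, not the smarter budgets, drives the result. A real analysis would also attach confidence intervals to each step rate.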

Second-order hypothesis

Could smart budgeting succeed by setting lower targets that are easier to hit? If so, hitting them may no longer provide the same value. –> We need to measure the retention rate of users who hit their savings goals to see whether the correlation weakens.
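One simple way to check whether the goal-retention link weakens is to compare, within each variant, the retention of users who hit their goal against those who didn’t. The sketch below assumes a hypothetical per-user export (`User`, with `variant`, `reached_goal`, and `retained` fields; the retention checkpoint itself is an assumption). It uses a plain difference in retention rates as a stand-in for “correlation” rather than a formal statistical test.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class User:
    """Hypothetical per-user record exported from the experiment."""
    variant: str        # "control" or "treatment"
    reached_goal: bool  # hit the monthly savings goal
    retained: bool      # still active at an assumed retention checkpoint


def retention_rate(users: list[User]) -> float:
    """Share of users retained; NaN if the group is empty."""
    if not users:
        return math.nan
    return sum(u.retained for u in users) / len(users)


def goal_retention_gap(users: list[User], variant: str) -> float:
    """Retention of goal-reachers minus retention of non-reachers in one variant."""
    in_variant = [u for u in users if u.variant == variant]
    hit = [u for u in in_variant if u.reached_goal]
    missed = [u for u in in_variant if not u.reached_goal]
    return retention_rate(hit) - retention_rate(missed)
```

If the gap is clearly smaller in the treatment group than in control, hitting a smart budget is worth less than hitting a manual one, which is exactly the second-order effect this hypothesis warns about. In practice you would add confidence intervals or a significance test before drawing that conclusion.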

As you can see, these four types of hypotheses help you uncover extra considerations in your planning, so you can get the most out of the experiment.

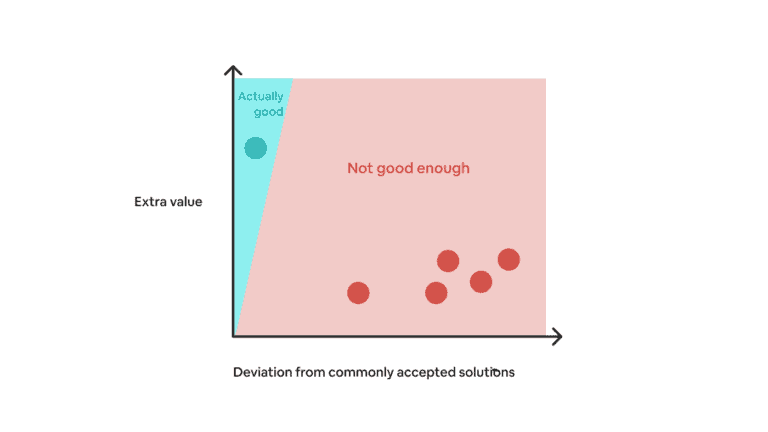

It will take some practice to get the hang of thinking “against” your brilliant ideas, but once it becomes second nature, every experiment you run will return 2-3X the value.

Next time you plan to test a new idea, give this framework a try.