“I’ve invested too much to quit now.”

This phrase is commonly heard in investing, gambling, and pretty much any activity that requires investment before one sees an unguaranteed return, including product development.

However, the rationale behind such thinking is flawed. When the return is no longer relevant or is clearly unachievable, the cost you’ve already incurred should not be factored into the current decision, because that cost is gone whether you continue or stop.
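To make this concrete, here is a minimal sketch in Python. The function name and all figures are hypothetical, chosen only for illustration. The amount already spent is passed in on purpose and then never used, because it should not change the answer.

```python
def should_continue(sunk_cost, remaining_cost, expected_return):
    """Decide whether to keep investing by looking only at the future.

    sunk_cost is accepted and deliberately ignored: money already
    spent is the same whether we continue or stop.
    """
    return expected_return > remaining_cost


# Hypothetical numbers: 500k already spent, 200k still needed to finish,
# and the feature is now expected to return only 50k.
decision = should_continue(
    sunk_cost=500_000,
    remaining_cost=200_000,
    expected_return=50_000,
)
print(decision)  # False: stopping is the better choice here
```

Changing `sunk_cost` to any other value leaves the result untouched, which is the whole point.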

Yet, most people have trouble calling it quits because they do not want to give up their prior investment. As a result, they end up suffering even bigger losses.

This irrationality is called the Sunk Cost Fallacy, and it’s one of the deadliest traps when building a product.

How can the sunk cost fallacy hurt a product?

Here’s a common misconception among product teams:

“If we have started building a feature, we should finish it. If we have launched it, we should keep it. It can’t hurt, anyway. Otherwise, our effort will go to waste.”

This line of thought is incorrect for a few reasons.

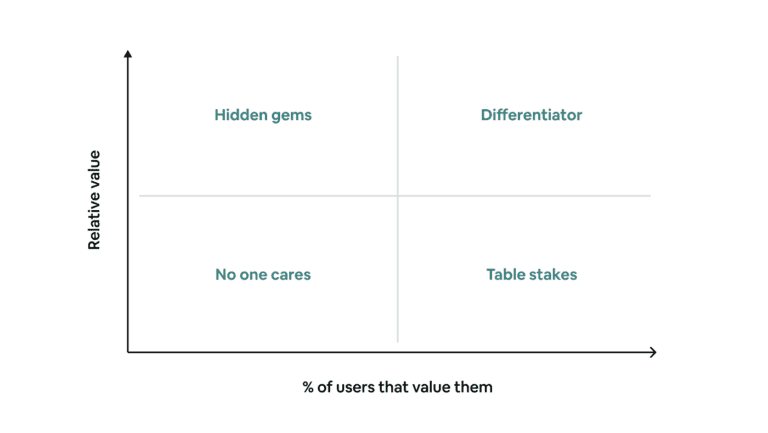

First of all, shipping the wrong feature can have a net negative impact on your product through feature cannibalization or increased complexity. This is why unshipping is sometimes beneficial.

But even if that’s not the case, you should always think about the opportunity cost. In other words, what could you have done with your time instead? Product teams that prioritize high-impact work will always beat teams that don’t.

Lastly, the sunk cost fallacy can also damage your product by creating “false wins” that misguide your team. When everyone keeps building on top of initiatives that should’ve been killed a long time ago, your product will eventually collapse.

Why does the sunk cost fallacy happen?

In the context of product development, the sunk cost fallacy usually happens for a combination of reasons:

- No success criteria — Most product teams don’t have a proper framework for evaluating feature success. Even when they think they do, the criteria are often arbitrary. When there is no clear indicator of whether a feature has succeeded or failed, the default action is to continue on the same course.

- Self-serving bias — Nobody likes to see their effort turned to dust. It can be a huge blow to their self-esteem. That’s why once someone has committed to a project, they tend to cherry-pick only the positive signals to justify its continuation.

- Not knowing how to validate ideas early — The more you have invested, the harder it is to quit. Many product teams say they follow the Lean Startup approach of validating ideas early via MVPs. However, 9 times out of 10, the MVPs they’ve built are far from “minimal”.

- “Sacred Cow” ideas — When a product team is assigned a solution to execute rather than a problem to solve, they might assume that the senior leadership has gone through intensive planning, which makes the idea too valuable to give up. Besides, they don’t really have the option to change course anyway.

How can product teams combat the sunk cost fallacy?

Pre-mortem & Post-mortem

Get into the habit of evaluating features objectively by implementing pre-mortem and post-mortem meetings.

A pre-mortem asks the team to define what success for a particular feature will look like, why it might fail, and what to do in each scenario before the development even begins.

As a rule of thumb, your success metric should reflect why you are building that feature in the first place. For example, if you believe a feature is a must-have for your enterprise users, then the success metric should be something like “70% adoption rate among companies with 1000+ employees”.

A post-mortem is where the team evaluates how this feature actually performed, why it failed or succeeded, and what to do next. Because you’ve laid out the criteria beforehand, it’ll be a lot harder to cherry-pick the result.
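As a rough illustration, the pre-agreed criterion and the post-mortem check could be sketched like this in Python. The class, the function names, and the sample accounts are all hypothetical. Only the 70% target and the 1000+ employee segment come from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """A target agreed on in the pre-mortem, before development starts."""
    description: str
    target_rate: float  # e.g. 0.70 for 70% adoption


def adoption_rate(accounts, min_employees):
    """Share of accounts at or above min_employees that adopted the feature."""
    segment = [a for a in accounts if a["employees"] >= min_employees]
    if not segment:
        return 0.0
    adopters = sum(1 for a in segment if a["adopted"])
    return adopters / len(segment)


def post_mortem(criterion, actual_rate):
    """Compare the measured result with the pre-agreed target."""
    return "met" if actual_rate >= criterion.target_rate else "missed"


criterion = SuccessCriterion("Adoption among companies with 1000+ employees", 0.70)

# Made-up sample data for illustration only.
accounts = [
    {"employees": 5000, "adopted": True},
    {"employees": 1200, "adopted": False},
    {"employees": 3000, "adopted": True},
    {"employees": 1000, "adopted": False},
    {"employees": 50, "adopted": True},  # outside the target segment
]

rate = adoption_rate(accounts, min_employees=1000)
print(f"{rate:.0%} -> {post_mortem(criterion, rate)}")  # 50% -> missed
```

Because the criterion is frozen before any results come in, the small account that did adopt the feature cannot be used to argue for success after the fact.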

Also, make sure you allow enough time for a feature to show its effect. If you call a post-mortem meeting immediately after its release, you can only discuss the development process (output), not the impact (outcome).

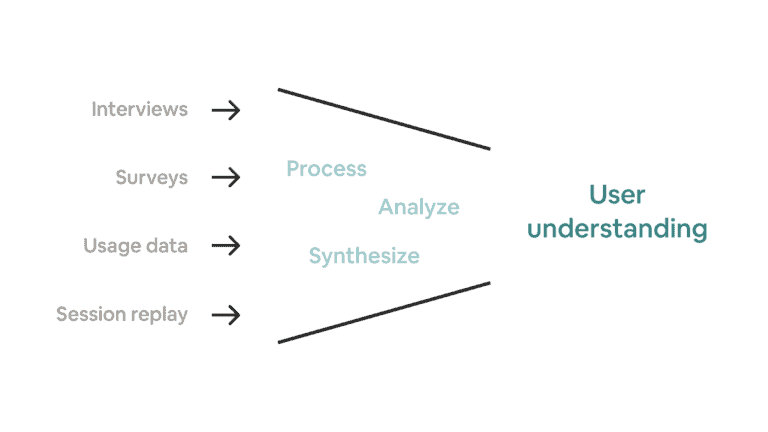

Expand idea validation methods

Challenge your team to validate ideas as early as possible, ideally without writing a line of code. For example:

- Smoke test — create a fake CTA or landing page to assess user interest.

- Wizard of Oz test — manually operate a high-fidelity prototype to verify design usability.

- Concierge MVP — offer a human-operated service to gather user feedback.

Although these methods don’t offer the same level of confidence as an A/B test or a full feature release, they do let you gather useful insights for a fraction of the cost. Remember, the less you have invested, the easier it is to change directions.

You can’t expect your team to master a wide array of idea validation methods overnight, so make sure to dedicate some time to training alongside regular product work.

Foster a good product culture

Giving up feels terrible, even if it’s the right move to make. The only way to mitigate this blow to team morale is to foster a good product culture.

Help your team understand that building a product is about creating value, not shipping features. Instead of focusing on the time lost, focus on the lesson learned (and not in a “pity” way).

Over time, whenever people have to kill an initiative, they’ll begin to think, “Hey, it’s good that we found out what doesn’t work. We can now divert our resources to trying something else!”

Of course, building a culture is hard. If you are a product leader, it is your responsibility to promote the right mindset through contextual mentorship and processes. Most importantly, you must lead by example and not fall into the sunk cost trap yourself.

But here is the tricky part…

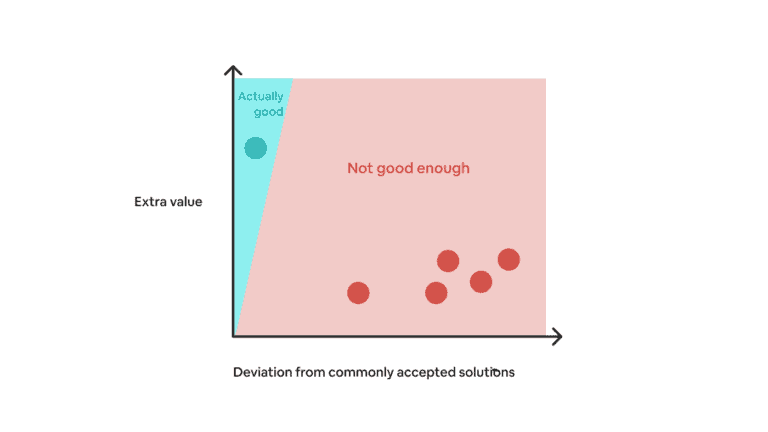

It’s not always easy to determine whether a feature didn’t work because it was a bad idea or because you didn’t invest enough. In the second scenario, giving up too early will, in fact, throw your valuable work down the drain.

Although there isn’t any hard-and-fast rule, here are two factors you can look at to determine which is the case:

- Expected rate of return — Not every initiative will generate returns at the same rate. For example, it might take only two weeks to see the impact of a new onboarding flow, but it might take years for a developer platform to gain meaningful traction.

- Target vs actual — How far is the result from your definition of success? Was the bar set too high? Are there any signals that partially validate your hypothesis?

In many situations, a definite answer does not exist.

Sometimes you win by cutting losses ruthlessly. Other times, you win by doubling down on a strategy despite not seeing early results. The point is to be aware of the sunk cost fallacy so that you can make the most deliberate decision in each unique context.